This post was originally published on Piyanka Jain’s Substack.

It feels like we’re living in an AI whirlwind, doesn’t it? Every day, a new tool promises to revolutionize how we work, decide, and create. As much as that makes us excited for the brave new world of GenAI, the larger-than-life promises also make us skeptical about how brave, and how new, this world really is.

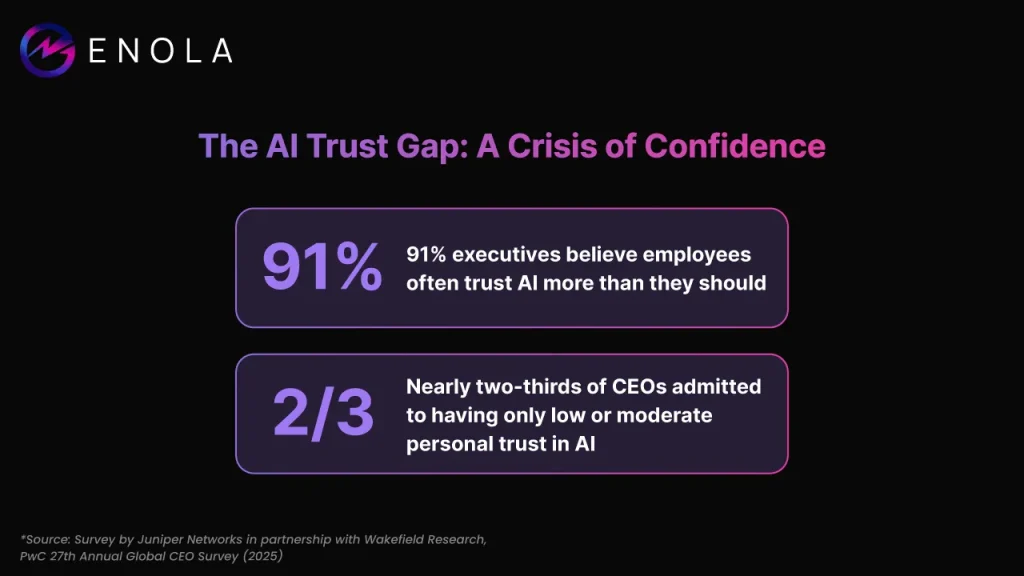

Business leaders are caught in a paradox: while companies are rapidly adopting AI to stay competitive, a healthy and growing skepticism is also taking root. This isn’t just a feeling; it’s a trend backed by data. A recent survey found that nearly nine in ten executives worry about verifying the accuracy of AI outputs, and 91% believe employees often trust AI more than they should.

This caution extends to the highest levels. In a 2025 PwC survey, while many CEOs anticipated that generative AI would boost profits, nearly two-thirds admitted to having only low or moderate personal trust in the technology itself. The message is clear: while the promise of AI is undeniable, the rush to adopt it cannot ignore the foundational need for accuracy and trustworthiness.

The problem isn’t AI itself, but how we’re being asked to trust it: blindly.

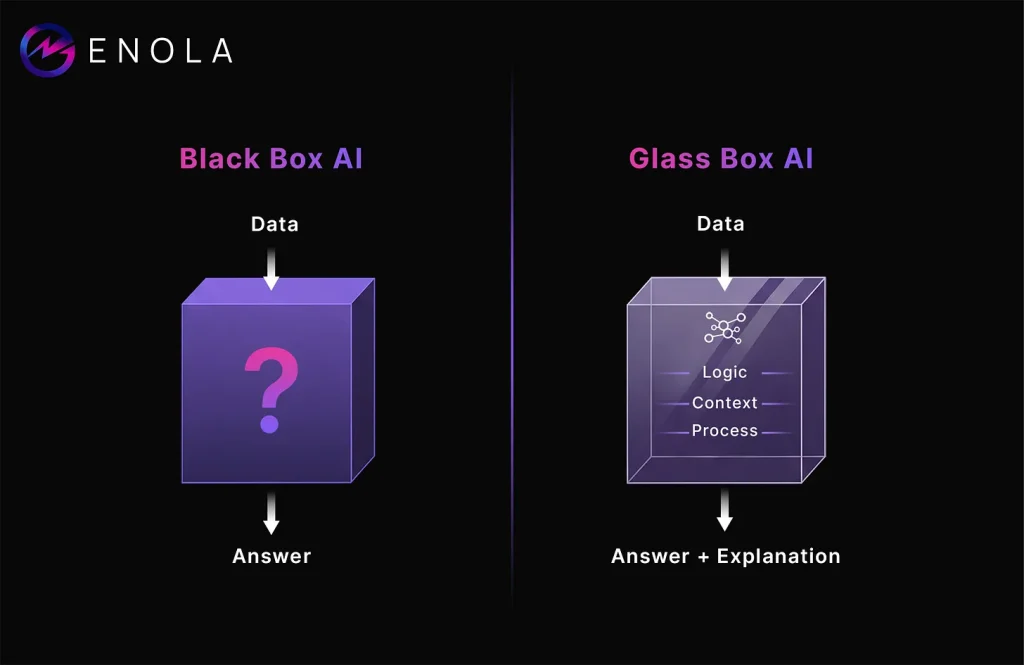

Many AI tools operate as “black boxes,” offering up answers without showing their work. It’s like a brilliant but secretive consultant who gives you a multi-million dollar recommendation but refuses to explain their reasoning.

Would you bet your business on that? I don’t think so.

The High Cost of a Guessing Game

The core of this distrust isn’t just the technology, but the data it relies on. This risk becomes tangible when you consider Gartner’s prediction that a staggering 85% of AI projects fail, largely due to poor quality inputs and irrelevant data. Analysts estimate that such failures can cost organizations as much as $12.9 million from issues ranging from lost sales to compliance fines.

Fast results mean nothing without accuracy.

An AI agent (or any other kind of AI solution, for that matter) that “hallucinates”—a phenomenon where it generates convincing but entirely false information—can cause serious damage.

Imagine an AI-powered inventory system for an e-commerce giant that hallucinates a spike in demand for a particular product. The company might over-order stock, leading to millions in warehousing costs for unsold goods. Or consider how an unchecked AI pricing tool could easily create a phantom fare, selling tickets for a fraction of their cost. This isn’t just a hypothetical risk; similar glitches, often called ‘mistake fares,’ have already cost airlines and other ticketing platforms millions and created customer service nightmares in the past.

Another classic example is AI in hiring. An algorithm trained on historical hiring data from a company with a pre-existing gender bias might learn to penalize resumes that include words associated with women, like “women’s chess club captain.” The AI isn’t malicious; it’s simply reflecting the flawed data it was given. Without transparency, you would never know this bias was steering your hiring decisions. The risk is simply too high when the stakes are real.

A Practical Guide: How to Know Which AI to Trust

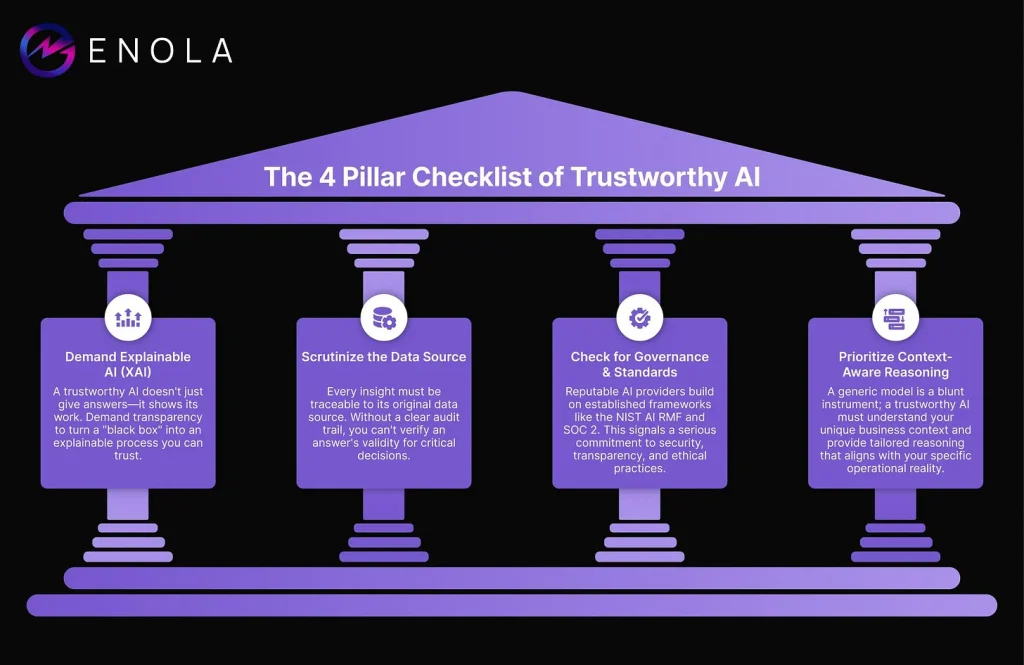

So, how do you navigate this new landscape? Trust in AI shouldn’t be a leap of faith. It should be earned through transparency and reliability. Here are four steps you can take to distinguish a trustworthy AI partner from a risky black box.

Demand Explainable AI (XAI)

A trustworthy AI should not just give you an answer; it should show you its work. This is the core idea behind Explainable AI (XAI). It’s the difference between a magic trick and a science experiment. Trust me, as a business leader, you want the science experiment.

How to act on this: When you’re evaluating a new AI tool, don’t just settle for a flashy demo. Ask the vendor pointed questions. For instance: “If your tool predicts a customer is likely to churn, can you show me the top three factors that led to that prediction?” or “Can you expose the confidence score for this forecast, and what data points are lowering that score?” A trustworthy platform will let you see feature importance, data lineage, and confidence levels, not just a final number on a dashboard. If the vendor can’t explain how their AI thinks, it’s a major red flag.
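To make the “top three factors” question concrete, here is a minimal sketch of what an explainable churn prediction can look like. It assumes a toy linear model; the feature names, weights, and customer values are all made up for illustration and do not come from any real product. In a linear model, each feature’s contribution is simply its weight times its value, so the prediction can be broken down exactly.

```python
import math

# Hypothetical weights for a toy linear churn model (illustrative values,
# not taken from any real product or dataset).
WEIGHTS = {
    "support_tickets_last_30d": 0.8,
    "days_since_last_login": 0.05,
    "monthly_spend_usd": -0.01,
    "seats_in_use": -0.3,
}
BIAS = -1.0


def explain_churn(customer: dict, top_n: int = 3):
    """Return the churn probability and the top contributing factors."""
    # For a linear model, each feature's contribution to the score is
    # weight * value, so the score decomposes exactly into its parts.
    contributions = {name: WEIGHTS[name] * customer[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))  # logistic function
    top_factors = sorted(
        contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
    )[:top_n]
    return probability, top_factors


# A made-up customer record.
customer = {
    "support_tickets_last_30d": 4,
    "days_since_last_login": 20,
    "monthly_spend_usd": 50,
    "seats_in_use": 2,
}

probability, top_factors = explain_churn(customer)
print(f"Churn probability: {probability:.2f}")
for name, contribution in top_factors:
    direction = "raises" if contribution > 0 else "lowers"
    print(f"{name}: {direction} the score by {abs(contribution):.2f}")
```

Real models are rarely this simple, and more complex ones need dedicated attribution methods. The point of the sketch is the shape of the answer you should expect from a vendor: a prediction, plus the named factors that pushed it up or down.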

A truly transparent tool will not only show you the factors but also the confidence score and the underlying data—a foundational principle I built into tools like Enola.

Scrutinize the Data Source

Adopt a “trust but verify” mindset. Every single insight from an AI must be traceable back to its source data. If an AI tool can’t show you the query it ran or the table it used, you can’t verify that the insight is valid. It’s an analytical dead end.

How to act on this: Make this a core part of your data culture. Encourage your team to ask, “Where did this number come from?” when presented with an AI-generated insight. A trustworthy tool will make this easy. If an AI dashboard shows that customer churn is increasing in a specific region, you should be able to click on that insight and see the underlying data: the customer IDs, the subscription dates, the usage logs, and the cancellation reasons. If you can’t trace the insight from the high-level conclusion all the way back to the raw data in your warehouse, you’re flying blind.
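As a rough illustration of what “traceable” means in practice, the sketch below bundles a headline number with the exact query and the row IDs behind it. Everything here is an assumption made for the example: the `subscriptions` table, its columns, the sample rows, and the `TracedInsight` class are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class TracedInsight:
    """An aggregate number bundled with the evidence needed to verify it."""
    label: str
    value: int
    query: str         # the exact SQL that produced the value
    source_table: str  # where the contributing rows live
    row_ids: list      # primary keys of the contributing rows


def churned_in_region(conn, region: str) -> TracedInsight:
    query = (
        "SELECT customer_id FROM subscriptions "
        "WHERE region = ? AND cancelled_on IS NOT NULL ORDER BY customer_id"
    )
    ids = [row[0] for row in conn.execute(query, (region,))]
    return TracedInsight(
        label=f"Churned customers in {region}",
        value=len(ids),
        query=query,
        source_table="subscriptions",
        row_ids=ids,
    )


# Tiny in-memory dataset standing in for a warehouse table (made-up rows).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE subscriptions ("
    "customer_id INTEGER PRIMARY KEY, region TEXT, "
    "started_on TEXT, cancelled_on TEXT, cancel_reason TEXT)"
)
conn.executemany(
    "INSERT INTO subscriptions VALUES (?, ?, ?, ?, ?)",
    [
        (1, "EMEA", "2024-01-10", "2024-06-01", "price"),
        (2, "EMEA", "2024-02-15", None, None),
        (3, "APAC", "2024-03-01", "2024-05-20", "missing feature"),
        (4, "EMEA", "2024-03-12", "2024-07-04", "switched vendor"),
    ],
)

insight = churned_in_region(conn, "EMEA")
print(insight.label, "=", insight.value)
print("Query:", insight.query)
print("Rows:", insight.row_ids)
```

With the query and row IDs attached, anyone on the team can rerun the SQL or pull up the individual records and confirm the number for themselves.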

Check for Governance and Standards

Effective AI providers build their tools on established governance frameworks. This isn’t just jargon; it’s a signal that they take ethics, security, and reliability seriously. Look for adherence to global standards.

NIST AI Risk Management Framework (RMF): Developed by the U.S. National Institute of Standards and Technology, this is a voluntary framework that provides a structured process for managing risks associated with AI systems, ensuring they are trustworthy and responsible.

The EU AI Act: This is landmark legislation that classifies AI systems by risk. High-risk systems (e.g., in medical devices or critical infrastructure) face strict requirements for transparency, data quality, and human oversight.

Gartner’s AI-TRiSM: This framework focuses on AI Trust, Risk, and Security Management, providing businesses with the tools to ensure their AI models are reliable, fair, and effective.

How to act on this: Check the provider’s website, security documents, and technical whitepapers. Do they mention these frameworks? Do they hold certifications like SOC 2 Type II or ISO 27001, which prove their commitment to data security? A provider that is serious about trust will be proud to share its adherence to these standards.

Prioritize Context-Aware Reasoning

A generic AI model is a blunt instrument. It might know what “revenue” is in a general sense, but does it understand how your business defines “Annual Recurring Revenue (ARR)” versus “Monthly Recurring Revenue (MRR)”? Does it know which of your sales channels are high-margin versus high-volume? The most valuable AI tools are those that learn your specific business environment.

How to act on this: During a product demo, test the AI with a question specific to your business and its unique jargon. Instead of asking, “What are our sales?” ask, “Compare our Q3 sales for the ‘Pro-Tier’ subscription in the EMEA region against our Q2 results, excluding the one-time bulk purchase from Global Corp Inc.” A generic AI will get stuck. A context-aware AI will understand the nuance, parse the specifics of your request, and deliver a genuinely useful insight.
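Here is a small sketch of why that business context matters. It assumes a made-up set of orders and a hypothetical `one_time_bulk` flag that encodes the rule “exclude one-time bulk purchases.” None of the figures are real. The same Q3 versus Q2 comparison is computed with and without the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    quarter: str
    region: str
    tier: str
    customer: str
    amount: float
    one_time_bulk: bool = False  # hypothetical flag for one-off bulk deals


# Made-up orders for illustration only.
ORDERS = [
    Order("Q2", "EMEA", "Pro-Tier", "Acme Ltd", 40_000),
    Order("Q2", "EMEA", "Pro-Tier", "Global Corp Inc", 250_000, one_time_bulk=True),
    Order("Q3", "EMEA", "Pro-Tier", "Acme Ltd", 46_000),
    Order("Q3", "EMEA", "Basic", "Beta GmbH", 9_000),
    Order("Q3", "AMER", "Pro-Tier", "Delta LLC", 70_000),
]


def sales(orders, quarter, region, tier, exclude_one_time_bulk=True):
    """Sum sales for one slice, optionally applying the bulk-deal rule."""
    return sum(
        o.amount
        for o in orders
        if o.quarter == quarter
        and o.region == region
        and o.tier == tier
        and not (exclude_one_time_bulk and o.one_time_bulk)
    )


q2 = sales(ORDERS, "Q2", "EMEA", "Pro-Tier")
q3 = sales(ORDERS, "Q3", "EMEA", "Pro-Tier")
naive_q2 = sales(ORDERS, "Q2", "EMEA", "Pro-Tier", exclude_one_time_bulk=False)

print(f"Q3 vs Q2 with the business rule applied: {(q3 - q2) / q2:+.0%}")
print(f"Q3 vs Q2 ignoring the rule: {(q3 - naive_q2) / naive_q2:+.0%}")
```

In this toy data, applying the rule shows modest growth, while ignoring it makes the same quarter look like a collapse. A tool that doesn’t know your definitions can be confidently wrong in exactly this way.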

From Theory to Practice

I know this all sounds great in theory, but putting it into practice can feel daunting.

After two decades in the analytics trenches, I grew frustrated watching smart business leaders get stuck between waiting for overworked analyst teams and trying to decipher cryptic dashboards. They were drowning in data but starved for clear, trustworthy answers.

This frustration is what led me to build Enola, an AI Super-Analyst designed from the ground up for trust. We embedded our proprietary BADIR™ framework into its core, ensuring every answer is explainable and aligned with real business goals. Enola connects directly and securely to your data warehouse, so your data never leaves your environment, and it shows its work for every analysis. It’s about making sure you can embrace AI’s speed without sacrificing the accuracy and clarity you need to make confident decisions.

The age of AI is here, and with it comes a healthy dose of skepticism. But that skepticism doesn’t have to lead to paralysis.

By asking the right questions, demanding transparency, and choosing tools built on a foundation of trust, you can harness the power of AI to move your business forward, faster and smarter than ever before. Don’t settle for magic tricks; demand an AI that is ready to show its work. Your business deserves nothing less.

FAQs:

How does Enola help businesses close the AI trust gap?

The AI trust gap exists because most companies adopt AI quickly but remain unsure about its accuracy and transparency. Blindly trusting black-box AI can lead to costly mistakes, like overstocking products or making biased hiring decisions.

Enola bridges this gap by combining speed with trustworthiness. It shows exactly how insights are generated, verifies them against your real business data, and aligns recommendations with your company’s definitions and goals.

By unifying fragmented data silos and exposing the logic behind every answer, Enola turns skepticism into confidence and helps leaders make fast decisions without sacrificing accuracy or security.

Explore Enola’s Sandbox to see how it closes the trust gap for your business data.

Why is explainable AI important, and how does Enola provide transparency?

Explainable AI (XAI) is crucial because it lets you see why an AI made a particular recommendation or prediction. Traditional black box AI tools might give you an answer with no reasoning, a situation no savvy leader would accept, much like a consultant who refuses to show their work.

Enola takes an open, glass-box approach. It does not just output a result; it shows its work. You can actually see the logic, context, and reasoning behind each answer. This level of transparency is how Enola earns trust. By turning hidden processes into clear explanations, Enola makes AI insights understandable and actionable for you and your team.

Learn more about Enola’s product features and how transparency is built into every analysis.

How does Enola ensure data accuracy and trace insights back to reliable sources?

Enola tackles this head-on by adopting a “trust but verify” philosophy with data. It connects directly to your company’s databases and data warehouse, automatically cataloging and unifying all your data sources and ending fragmented silos that lead to confusion.

In practice, this means you can click on any AI-generated number or conclusion and see exactly which records, tables, or queries it came from. There is no analytical dead end. This transparency in data lineage gives you confidence that Enola’s answers are not only fast, but grounded in truth.

Discover more about Enola’s BADIR™ methodology that powers this data accuracy.

What governance and standards does Enola follow to ensure responsible AI?

Enola’s platform aligns with established AI governance frameworks like the U.S. NIST AI Risk Management Framework and guidelines from the EU AI Act, which emphasize transparency, fairness, and human oversight.

Enola follows industry best practices for data security and privacy. The platform can be deployed so that your data never leaves your environment, and it meets enterprise-grade security standards (for example, complying with SOC 2 and ISO 27001 requirements for data protection).

In short, Enola isn’t just smart and fast – it’s safe, compliant, and responsible, giving you peace of mind as you integrate it into your business.

Read more about Enola’s approach and vision for responsible AI.

How is Enola different from generic AI tools in understanding our business context?

Enola is not just another generic AI. It is designed as a context-aware AI Super Analyst that truly understands your business. Generic large language models might be brilliant at general knowledge, but they are blunt instruments for specialized business questions. They do not know your company’s terminology, metrics, or nuances.

Enola, on the other hand, learns from your actual data and definitions. It automatically learns your data catalog and business logic.

This means the answers you get are truly actionable, tailored to your data and your business strategy, rather than one-size-fits-all guesses. Enola’s ability to reason with context gives your team a competitive edge by uncovering the why behind trends, not just the what.

See how Enola’s product capabilities adapt to your business context.

How can Enola deliver insights faster and at lower cost than traditional analytics?

Traditionally, getting a complex data question answered meant waiting days or weeks for analysts to gather data from various silos, clean it, run analyses, and create a report.

This is a slow and expensive process prone to human error.

In contrast, Enola can do this heavy lifting in minutes. It automatically pulls together data from all relevant sources and applies advanced analytics and AI to answer your question almost instantly.

This speed does not come at the expense of quality. Enola’s insights are high quality because of the robust explainability and data verification behind them. The result is faster decision-making and a dramatically lower cost of insight. In essence, you are getting better outcomes for a fraction of the cost.

Try it yourself in Enola’s Sandbox and experience decision-ready insights in real time.